What is AI Due Diligence?

Editor: Tony


Due diligence is a must in the HR industry, and so is keeping pace with the current AI trend. Design thinking has become the core of the product, and choosing the right starting point for AI applications is crucial.

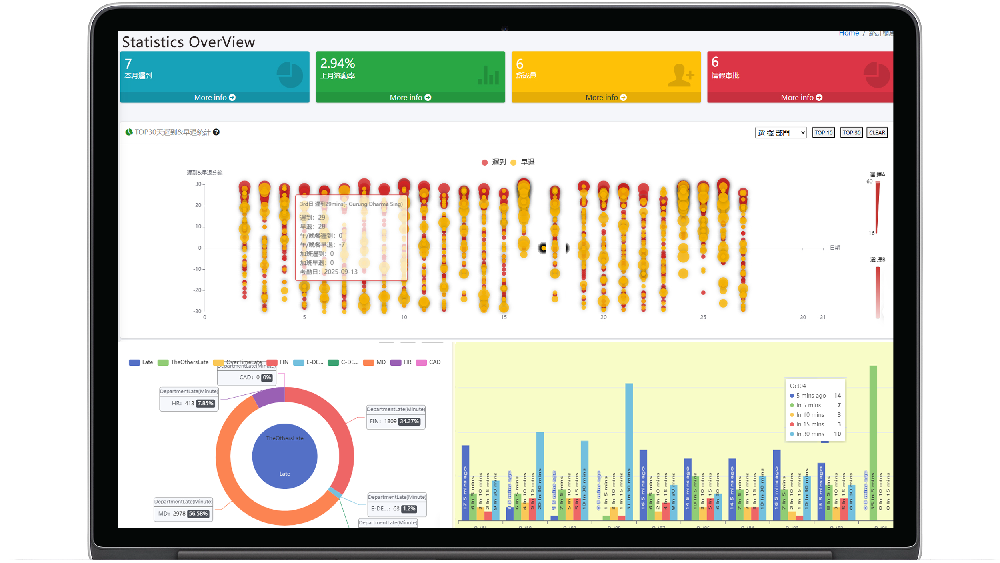

Time & Payroll Suite

Attendance, Payroll and Access Control Solution for Cost Reduction and Productivity Gains Details

All‑in‑one automated timekeeping and payroll system with robust, secure processes to guarantee payroll integrity. Includes leave management, shift rostering, multi‑site and chain support, multi‑ledger accounting, and comprehensive modules.

What is AI due diligence?

AI due diligence refers to using artificial intelligence (AI) technologies to enhance and automate the traditional due diligence process.

This innovative approach is changing how organizations conduct comprehensive investigations before making important business decisions.

At its core, AI due diligence leverages advanced technologies such as machine learning, natural language processing (NLP), and data analytics to efficiently evaluate information. These tools process information far faster than human review.

In M&A, the value of AI due diligence is increasingly prominent. While AI adoption in technical due diligence still lags behind, legal teams have already begun using AI to streamline the due diligence process.

Key advantages of AI due diligence include:

- Rapid analysis of massive amounts of documents

- Identification of patterns and anomalies

- Reduction of human error

- Cost and time savings

If implemented correctly, AI systems can analyze both structured data (such as financial spreadsheets) and unstructured information (such as contracts and emails). This comprehensive analysis helps identify potential risks and opportunities that might otherwise be overlooked.
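As a simplified illustration of the two kinds of input, the sketch below flags risky clauses in contract text (unstructured) and unusual values in a payment series (structured). It is an assumption-laden stand-in rather than the ML or NLP models described above: clause detection uses hypothetical regular-expression patterns, and anomaly detection uses a plain z-score test with an assumed cutoff of 2.0.

```python
import re
import statistics

# Hypothetical clause patterns: a rule-based stand-in for a trained NLP model.
RISK_CLAUSES = {
    "change_of_control": re.compile(r"change\s+of\s+control", re.IGNORECASE),
    "exclusivity": re.compile(r"\bexclusiv(e|ity)\b", re.IGNORECASE),
    "unlimited_liability": re.compile(r"unlimited\s+liability", re.IGNORECASE),
}


def flag_clauses(contract_text: str) -> list[str]:
    """Return the names of risk clauses found in unstructured contract text."""
    return [name for name, pattern in RISK_CLAUSES.items() if pattern.search(contract_text)]


def flag_outliers(amounts: list[float], z_cutoff: float = 2.0) -> list[int]:
    """Return indices of structured values whose population z-score exceeds the cutoff."""
    mean = statistics.mean(amounts)
    stdev = statistics.pstdev(amounts)
    if stdev == 0:
        return []  # all values identical, so nothing stands out
    return [i for i, x in enumerate(amounts) if abs(x - mean) / stdev > z_cutoff]


if __name__ == "__main__":
    text = "Either party may terminate upon a Change of Control of the other party."
    print(flag_clauses(text))
    payments = [1200.0, 1150.0, 1300.0, 1250.0, 1180.0, 1220.0, 98000.0]
    print(flag_outliers(payments))
```

With very few data points a population z-score cannot exceed sqrt(n - 1), so small samples need a lower cutoff or a different test; flagged items are leads for human reviewers, not conclusions.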

Organizations must nevertheless approach AI due diligence with caution. Experts recommend maintaining human oversight of the process rather than blindly trusting AI outputs.

The effectiveness of AI-driven due diligence varies with circumstances. For smaller, privately owned companies with limited online operations, it is less effective because little publicly available data exists for analysis.

AI Due Diligence: Technical Concepts and Practical Methods

Beyond using AI as a due diligence tool, the term AI Due Diligence (AI DD) also refers to the comprehensive assessment of artificial intelligence systems or AI-related projects and enterprises. Its core objectives are to identify technical risks, verify technical feasibility, evaluate alignment with business value, and ensure that AI applications comply with regulatory requirements, ethical standards, and business goals. As AI spreads deeper into finance, healthcare, industry, and other sectors, AI DD has become a critical step in corporate mergers and acquisitions, pre-implementation project reviews, and regulatory compliance reviews. Its scope spans multiple dimensions, including technical fundamentals, data governance, ethical compliance, and commercial implementation, which distinguishes it from traditional IT system due diligence (which focuses mainly on functionality and stability).

I. Technical Concepts of AI Due Diligence

1. Core Definition and Assessment Objectives

AI DD is essentially a "quantitative investigation of technical risks and value." It verifies the "reliability, security, compliance, and sustainability" of AI systems through systematic methodologies, ultimately addressing three core questions:

- Technical perspective: Is the AI system truly "usable" (meeting performance standards), "easy to use" (stability/maintainability), and "secure" (no vulnerabilities/biases)?

- Compliance perspective: Does it adhere to data privacy regulations (e.g., GDPR, China's Personal Information Protection Law), algorithmic transparency requirements (e.g., the risk-tiered obligations of the EU AI Act), and ethical guidelines (e.g., avoiding discriminatory decisions)?

- Commercial perspective: Is the technology aligned with business objectives? Does it have large-scale implementation capabilities? Are subsequent iteration costs controllable?

2. Core Assessment Dimensions (Technical Perspective)

The technical assessment of AI DD unfolds around the "AI system lifecycle," covering the entire chain from data input to model output. Key dimensions are as follows:

| Assessment Dimension | Core Focus Areas | Risk Examples |
|---|---|---|
| Data Governance | Legitimacy of data sources, data quality (completeness/accuracy/timeliness), data annotation quality, data privacy protection | Unauthorized data collection (privacy violations), high annotation error rates (model biases) |
| Model Technology | Rationality of model architecture, performance metrics (accuracy/recall/F1-score), generalization ability, interpretability | Model overfitting (sharp performance decline in real scenarios), black-box models (unable to trace decisions) |
| Systems Engineering | Model deployment architecture (cloud/edge), computing power support, stability (failure rate/response time), scalability | Excessively high computing costs (infeasible for scaling), response delays under high concurrency |
| Ethics and Fairness | Algorithmic biases (e.g., gender/racial discrimination), decision transparency, impact assessment on vulnerable groups | Recruitment AI scoring female candidates lower, loan AI discriminating against low-income groups |
| Compliance | Alignment with regional AI regulatory requirements (e.g., high-risk AI list under the EU AI Act), industry standards (e.g., FDA certification for medical AI) | Failure to declare high-risk AI applications (regulatory penalties), medical AI not passing clinical validation |

II. Practical Methods of AI Due Diligence

AI DD practice combines "technical verification tools" with "process-oriented methodologies," typically divided into three phases: Preparation Phase, Execution Phase, and Reporting Phase. While priorities vary by scenario (e.g., investment M&A, pre-project launch, regulatory review), the core process remains consistent.

1. Preparation Phase: Define Assessment Scope and Benchmarks

This phase aligns "assessment objectives" with "assessment resources" to avoid inefficient open-ended investigations. Key actions include:

- Define assessment boundaries: Identify priority dimensions based on the scenario (e.g., focusing on "technical barriers and commercialization capabilities" when investing in AI enterprises, and "compliance and security" for pre-launch medical AI).

- Establish assessment benchmarks: Set pass thresholds for key indicators (e.g., classification model accuracy ≥ 95%, data annotation error rate ≤ 2%, response time ≤ 500 ms), referencing industry standards (e.g., NIST AI Risk Management Framework) or business needs.

- Collect basic materials: Request that the assessed party provide AI system documentation (model architecture diagrams, data flow charts), data source certifications, compliance credentials (e.g., ISO/IEC 42001 AI management system certification), and historical performance reports.
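Recording the agreed benchmarks as data makes the execution phase mechanical to check. The following is a minimal sketch, assuming the three example thresholds from the list above; the `Benchmark` type, the metric keys, and the `check_benchmarks` helper are hypothetical names chosen for illustration.

```python
import operator
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Benchmark:
    metric: str
    threshold: float
    compare: Callable[[float, float], bool]  # operator.ge ("at least") or operator.le ("at most")


# Hypothetical benchmark table using the example thresholds from the text.
BENCHMARKS = [
    Benchmark("classification_accuracy", 0.95, operator.ge),
    Benchmark("annotation_error_rate", 0.02, operator.le),
    Benchmark("response_time_ms", 500.0, operator.le),
]


def check_benchmarks(measured: dict[str, float]) -> dict[str, str]:
    """Return PASS, FAIL, or MISSING for each benchmark given the measured values."""
    results = {}
    for b in BENCHMARKS:
        if b.metric not in measured:
            results[b.metric] = "MISSING"  # the assessed party has not supplied this figure
        elif b.compare(measured[b.metric], b.threshold):
            results[b.metric] = "PASS"
        else:
            results[b.metric] = "FAIL"
    return results


if __name__ == "__main__":
    print(check_benchmarks({"classification_accuracy": 0.962, "annotation_error_rate": 0.031}))
```

Treating an absent figure as MISSING rather than FAIL keeps "not yet provided" distinct from "provided and substandard", which matters when chasing documents from the assessed party.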

2. Execution Phase: Multi-Dimensional Technical Verification (Core Link)

The execution phase is the core of AI DD, requiring penetrative assessment across dimensions through a combination of "document review, technical testing, and interview verification." Specific methods are as follows:

(1) Data Governance Assessment: Identify Risks at the "Source"

Data is the "fuel" of AI, and data issues directly lead to model failure. Assessment methods include:

- Data legality review:

- Verify data authorization documents (e.g., user informed consent forms, third-party data procurement contracts) to ensure no "black-market data" or "unauthorized crawled data."

- Validate data desensitization effectiveness (e.g., whether ID numbers and phone numbers meet anonymization standards to avoid "re-identification" risks).

- Data quality testing:

- Quantitatively analyze data completeness (missing value ratio; e.g., key feature missing rate > 5% requires attention), accuracy (consistency with real-scenario data, e.g., deviations between user profile data and actual behavior), and timeliness (data update frequency; e.g., real-time recommendation AI requires data updates within 24 hours).

- Sampling inspection of annotation quality: Randomly select 10%-20% of annotated data for re-annotation by humans or third-party tools, and calculate the "annotation consistency rate" (e.g., an IoU (Intersection over Union) match of ≥ 90% for object detection tasks).
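Two of the quantitative checks above, the missing-value rate and the IoU-based annotation consistency rate, can be sketched in a few lines. This assumes tabular records as Python dictionaries (an absent key or `None` counts as missing) and bounding boxes as `(x_min, y_min, x_max, y_max)` tuples; the 5% and 0.9 thresholds mirror the examples in the text, and all function names are illustrative.

```python
Box = tuple[float, float, float, float]  # assumed layout: (x_min, y_min, x_max, y_max)


def missing_rates(records: list[dict], features: list[str]) -> dict[str, float]:
    """Share of records in which each feature is absent or None."""
    if not records:
        raise ValueError("no records to analyze")
    n = len(records)
    return {f: sum(1 for r in records if r.get(f) is None) / n for f in features}


def features_needing_attention(records: list[dict], features: list[str], limit: float = 0.05) -> list[str]:
    """Features whose missing rate exceeds the limit (5% in the example above)."""
    return [f for f, rate in missing_rates(records, features).items() if rate > limit]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def annotation_consistency_rate(original: list[Box], reannotated: list[Box], min_iou: float = 0.9) -> float:
    """Share of sampled boxes whose re-annotation overlaps the original with IoU >= min_iou.

    Assumes the lists are aligned: original[i] and reannotated[i] describe the same object.
    """
    if not original or len(original) != len(reannotated):
        raise ValueError("need two non-empty, aligned lists of boxes")
    matches = sum(1 for a, b in zip(original, reannotated) if iou(a, b) >= min_iou)
    return matches / len(original)


if __name__ == "__main__":
    sample = [{"age": 30, "income": 5000}, {"age": None, "income": 4200}, {"age": 41}, {"age": 29, "income": 6100}]
    print(features_needing_attention(sample, ["age", "income"]))
    print(annotation_consistency_rate([(0, 0, 10, 10), (20, 20, 30, 30)], [(0, 0, 10, 10), (21, 20, 31, 30)]))
```

Real object-detection audits must also match boxes between annotators before scoring; the aligned-list assumption here skips that step for brevity.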

(2) Model Technology Assessment: Verify "Capability" and "Reliability"

The model is the "core engine" of AI, and assessment must balance "performance" and "robustness" (anti-interference ability). Methods include:

- Performance metric reproduction:

- Re-run the model using test datasets provided by the assessed party to verify consistency between key indicators (e.g., accuracy/recall for classification tasks, BLEU score/perplexity for generative AI) and reported results, avoiding "test set overfitting" (i.e., strong performance only on specific datasets but failure in real scenarios).

- Supplement "real-scenario testing": Use "wild data" not involved in model training (e.g., enterprise actual business data) to test generalization ability. If performance declines by more than 10%, analyze the cause (e.g., significant differences between training data and real scenarios).

- Model robustness testing:

- Adversarial testing: Add small perturbations (e.g., modifying 1% of pixels for image AI, substituting synonyms for NLP models) and observe whether model decisions break down; a typical benchmark is a face recognition false recognition rate ≤ 0.1% under slight occlusion.

- Extreme scenario testing: Simulate edge cases (e.g., financial AI encountering "abnormal transaction data," autonomous driving AI encountering "rainstorm + backlight scenarios") to verify whether the model crashes or outputs dangerous decisions.

- Model interpretability assessment:

- For "black-box models" (e.g., deep learning), use tools (e.g., LIME, SHAP) to analyze the impact of key features on decisions (e.g., whether a loan AI rejects a user based on "income" rather than "gender").

- Request model iteration records (e.g., version update logs, performance trend changes) to assess the model's "iterability" (e.g., whether incremental training is supported to avoid full-data retraining for each update).
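The reproduction and real-scenario checks above come down to recomputing metrics independently and comparing them with the reported figures. Below is a minimal sketch for a binary classifier, assuming 0/1 label lists; the one-percentage-point reproduction tolerance is an assumption, and the text's "declines by more than 10%" is read here as a relative decline, which is also an assumption.

```python
def binary_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for 0/1 labels (1 = positive class)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}


def reproduction_gaps(reported: dict[str, float], reproduced: dict[str, float],
                      tolerance: float = 0.01) -> dict[str, float]:
    """Metrics where the independent re-run falls short of the reported value by more than the tolerance."""
    return {m: round(reported[m] - reproduced[m], 4) for m in reported
            if m in reproduced and reported[m] - reproduced[m] > tolerance}


def needs_root_cause_analysis(test_score: float, wild_score: float, max_decline: float = 0.10) -> bool:
    """True if the score on unseen 'wild' data is more than 10% (relative) below the test-set score."""
    return (test_score - wild_score) / test_score > max_decline


if __name__ == "__main__":
    ours = binary_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
    print(ours)
    print(reproduction_gaps({"accuracy": 0.95, "recall": 0.90}, ours))
    print(needs_root_cause_analysis(test_score=0.92, wild_score=0.80))
```

Only shortfalls are reported, since a re-run that beats the vendor's figure is rarely a due diligence concern; an engagement may prefer to flag deviations in both directions.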

(3) Systems Engineering Assessment: Ensure "Implementation" Feasibility

AI systems rely on engineering architecture for commercial implementation, with assessment focusing on "stability" and "cost controllability." Methods include:

- Deployment architecture review:

- Analyze architecture diagrams to confirm alignment with business scenarios (e.g., edge AI requires verifying whether terminal device computing power can support model operation; cloud AI requires assessing server load balancing capabilities).

- Verify computing power costs: Calculate unit costs (e.g., ≤ 10 yuan per 1,000 inferences) based on model parameter size (e.g., 10B-parameter large models) and inference request volume to determine profitability after scaling.

- Stability and security testing:

- Stress testing: Simulate high-concurrency scenarios (e.g., e-commerce AI recommendation systems during "Double 11" peak traffic) to observe response time and failure rate (e.g., request failure rate ≤ 0.01%).

- Security vulnerability scanning: Use AI security tools (e.g., IBM Adversarial Robustness Toolbox) and reference checklists (e.g., the OWASP Machine Learning Security Top 10) to identify vulnerabilities such as model poisoning (training data contamination) and model theft (reverse engineering a model through its API).
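The cost and stress-test figures above are simple arithmetic once the inputs are known. The sketch assumes a per-hour price for one inference server and its measured sustained throughput (hypothetical inputs you would obtain from the assessed party or your own load test), and a load-test log given as `(latency_ms, succeeded)` pairs; the nearest-rank p95 is one common percentile definition among several.

```python
import math


def cost_per_1000_inferences(server_cost_per_hour: float, inferences_per_second: float) -> float:
    """Unit cost of 1,000 inferences for one server running at a sustained throughput."""
    inferences_per_hour = inferences_per_second * 3600
    return server_cost_per_hour / inferences_per_hour * 1000


def p95_latency(latencies_ms: list[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def stress_summary(log: list[tuple[float, bool]]) -> dict[str, float]:
    """Failure rate and p95 latency of successful requests from a load-test log."""
    if not log:
        raise ValueError("empty load-test log")
    failures = sum(1 for _, ok in log if not ok)
    ok_latencies = [ms for ms, ok in log if ok]
    return {
        "failure_rate": failures / len(log),
        "p95_latency_ms": p95_latency(ok_latencies) if ok_latencies else float("nan"),
    }


if __name__ == "__main__":
    print(cost_per_1000_inferences(server_cost_per_hour=36.0, inferences_per_second=20.0))
    log = [(120.0, True)] * 95 + [(480.0, True)] * 4 + [(3000.0, False)]
    print(stress_summary(log))
```

The cost figure is in whatever currency the hourly price uses, and it ignores idle capacity, so real unit costs at low utilization will be higher.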

(4) Ethics and Compliance Assessment: Avoid "Hidden" Risks

Ethics and compliance are the "bottom-line requirements" of AI DD, especially for high-risk fields (healthcare, finance, justice). Assessment methods include:

- Algorithmic bias detection:

- Test model decision results stratified by sensitive attributes (gender, age, region) and calculate fairness metrics (e.g., equal opportunity difference, demographic parity difference). If one group's rejection rate is more than 20% higher than that of other groups, investigate potential bias.

- Example: A recruitment AI should demonstrate that the pass-rate difference between male and female candidates is ≤ 5% to avoid gender discrimination.

- Compliance verification:

- Align with regional regulations (e.g., the EU AI Act classifies applications such as biometrics and medical diagnosis as high-risk AI, subject to strict transparency and testing requirements) and confirm that the necessary declarations or certifications have been completed.

- Verify ethical review records (e.g., whether an AI ethics committee is established, and whether manual review mechanisms exist for high-risk decision scenarios).
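The two thresholds in the bias-detection step (a group rejection rate more than 20% above others, and a pass-rate gap above 5%) can be computed directly from stratified outcomes. This sketch covers only demographic-parity-style checks; it reads "20% higher" as relative to the lowest group rejection rate and the 5% gap as an absolute difference in pass rates, both of which are assumptions. Equal opportunity metrics additionally need ground-truth labels, and a maintained library such as Microsoft Fairlearn is better suited to production audits.

```python
from collections import defaultdict


def pass_rates(groups: list[str], passed: list[bool]) -> dict[str, float]:
    """Share of positive decisions (e.g. passing a screening) per sensitive group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, ok in zip(groups, passed):
        totals[group] += 1
        positives[group] += int(ok)
    return {g: positives[g] / totals[g] for g in totals}


def pass_rate_gap(rates: dict[str, float]) -> float:
    """Largest difference in pass rates between any two groups (demographic parity difference)."""
    return max(rates.values()) - min(rates.values())


def groups_with_excess_rejection(rates: dict[str, float], max_relative_excess: float = 0.20) -> list[str]:
    """Groups whose rejection rate is more than 20% (relative) above the lowest group's."""
    rejection = {g: 1 - r for g, r in rates.items()}
    baseline = min(rejection.values())
    if baseline == 0:
        # Some group is never rejected, so any non-zero rejection rate is disproportionately higher.
        return [g for g, r in rejection.items() if r > 0]
    return [g for g, r in rejection.items() if r > baseline * (1 + max_relative_excess)]


if __name__ == "__main__":
    groups = ["female"] * 10 + ["male"] * 10
    passed = [True] * 4 + [False] * 6 + [True] * 6 + [False] * 4
    rates = pass_rates(groups, passed)
    print(rates, round(pass_rate_gap(rates), 2), groups_with_excess_rejection(rates))
```

With samples this small, a gap can arise by chance; a real audit would pair these ratios with a significance test before concluding that bias exists.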

(5) Interview Verification: Supplement "Non-Documentary" Information

Technical documents may be "glossed over" or incomplete. Verify information authenticity by interviewing key personnel (AI algorithm engineers, data managers, operation and maintenance staff):

- Interview algorithm engineers: Understand the model training process (e.g., whether "shortcut features" were used leading to poor generalization) and performance bottlenecks (e.g., excessive model compression due to insufficient computing power).

- Interview data managers: Confirm data update mechanisms and annotation team qualifications (e.g., whether medical data annotation involves professional doctors).

- Interview business managers: Evaluate the alignment between technology and business (e.g., whether the AI recommendation system actually improves user conversion rates rather than just pursuing "click-through rates").

3. Reporting Phase: Output Risks and Recommendations

After the execution phase, a structured AI DD report should be generated, including core content:

- Assessment summary: Briefly describe the assessment scope, methods, and core conclusions (e.g., "The AI system meets data compliance requirements, but model generalization ability is insufficient; training data optimization is needed").

- Risk list: Categorize issues by "high/medium/low" risk levels (e.g., "High risk: 15% data annotation error rate leading to substandard model accuracy; Medium risk: Response time exceeding 1s affecting user experience").

- Improvement recommendations: Provide actionable solutions for risks (e.g., "High annotation error rate: Recommend introducing a third-party annotation review mechanism; Poor generalization: Suggest supplementing real-scenario data for incremental training").

- Conclusion and decision support: Give clear recommendations based on assessment results (e.g., "Recommend investing in the AI enterprise, but require data governance optimization within 3 months; Do not recommend medical AI launch until additional clinical validation is completed").
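Keeping findings in a structured form makes the risk list easy to sort, filter, and render consistently. A minimal sketch, assuming a three-level scale and hypothetical `Finding` fields; the sample findings reuse examples quoted above, and the MEDIUM level assigned to the generalization issue is illustrative, not an assessment result.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Finding:
    level: RiskLevel
    issue: str
    recommendation: str


def render_risk_list(findings: list[Finding]) -> str:
    """Render findings as report lines, most severe first."""
    ordered = sorted(findings, key=lambda f: f.level, reverse=True)
    return "\n".join(f"{f.level.name.title()} risk: {f.issue} -> {f.recommendation}" for f in ordered)


if __name__ == "__main__":
    findings = [
        Finding(RiskLevel.MEDIUM, "Poor generalization on real-scenario data",
                "Supplement real-scenario data for incremental training"),
        Finding(RiskLevel.HIGH, "15% data annotation error rate leading to substandard model accuracy",
                "Introduce a third-party annotation review mechanism"),
    ]
    print(render_risk_list(findings))
```

Because `sorted` is stable, findings at the same level keep the order in which they were recorded.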

III. Key Focus Areas of AI DD by Typical Scenarios

Assessment priorities vary significantly by scenario, requiring targeted methodological adjustments:

| Scenario | Core Assessment Dimensions | Key Focus Metrics |
|---|---|---|
| Investment in AI startups | Technical barriers, commercialization capabilities | Number of model patents, customer retention rate, unit customer acquisition cost |
| Pre-launch of medical AI products | Compliance, security, clinical effectiveness | FDA/NMPA certification, clinical test accuracy, data privacy protection |
| Corporate AI project M&A | Technical integration difficulty, team stability | Compatibility with existing systems, retention rate of core algorithm engineers |
| Regulatory AI review | Compliance, ethical fairness | Alignment with high-risk AI lists, algorithm bias rate, decision transparency |

IV. Tool Support for AI DD

Professional tools can enhance assessment efficiency and accuracy:

- Data quality tools: Talend (data completeness analysis), Labelbox (annotation quality verification);

- Model testing tools: H2O.ai (model performance reproduction), IBM Adversarial Robustness Toolbox (adversarial testing);

- Compliance and ethics tools: Microsoft Fairlearn (fairness detection), NIST AI Risk Management Framework (risk management guidance);

- Systems engineering tools: JMeter (stress testing), Prometheus (stability monitoring).

Summary

AI Due Diligence is a critical means of balancing the technical value of AI against its risks. Its core logic is full-chain verification "from data to models, from technology to compliance, and from documents to practice." As AI regulation tightens and technical complexity increases, AI DD must combine "the quantitative capabilities of technical tools" with "the qualitative judgment of industry experience" to effectively identify hidden risks and ensure the sustainable implementation of AI applications. In the future, as generative AI and multimodal AI develop, AI DD will add assessment dimensions such as "authenticity of generated content" and "consistency of cross-modal data," requiring continuous methodological iteration to keep pace with technological evolution.

